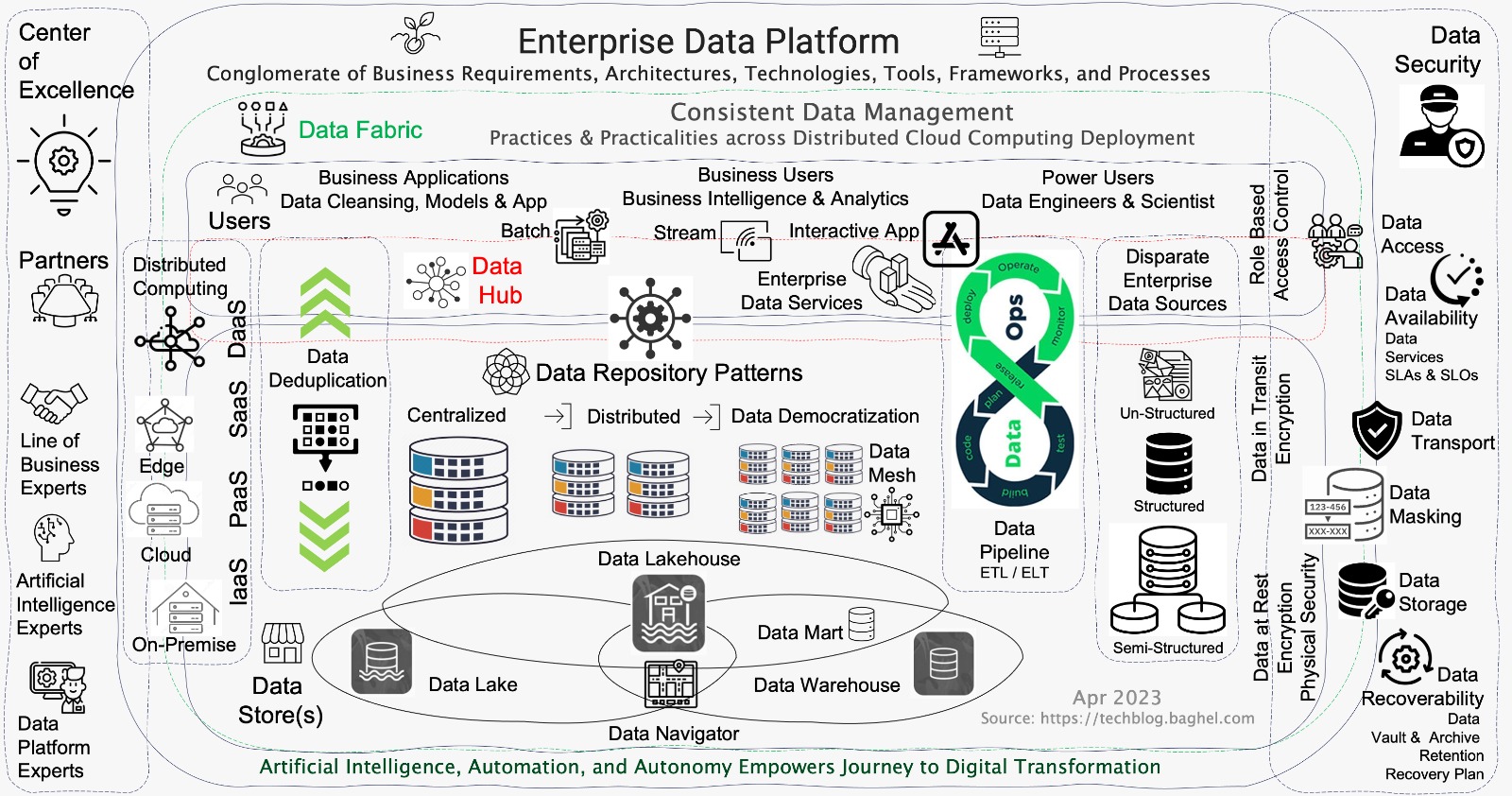

Modern Enterprise Data Platforms are a conglomerate of Business Requirements, Architectures, Tools & Technologies, Frameworks, and Processes to provide Data Services.

Data Platforms are mission-critical to an enterprise, irrespective of size and industry sector. A Data Platform is the foundation on which Business Intelligence & Artificial Intelligence deliver sustainable competitive advantage through business operations excellence and innovation.

- Data Store is a repository for persistently storing and managing collections of structured & unstructured data, files, and emails. Data Warehouse, Data Lake, and Data Lakehouse with Data Navigator are the specialized Data Store implementations that deliver Modern Enterprise Data Platforms. Data Deduplication is a technique for eliminating duplicate copies of repeating data. A successful Data Deduplication implementation optimizes the storage & network capacity needs of Data Stores, which is critical in managing the cost and performance of Modern Enterprise Data Platforms.

- Data Warehouse enables the categorization and integration of enterprise structured data from heterogeneous sources for future use. The operational schemas are prebuilt for each relevant business requirement, typically following the ETL (Extract-Transform-Load) process in a Data Pipeline. Updating Enterprise Data Warehouse operational schemas can be challenging and expensive. A Data Warehouse serves Data Analytics & Business Intelligence best but is limited to the particular problems its schemas were built for.

- Data Lake is an extensive centralized data store that hosts collections of semi-structured & unstructured raw data. Data Lakes enable comprehensive analysis of big and small data from a single location. Data is extracted, loaded, and transformed (ELT) at the moment it is needed for analysis. A Data Lake makes historical data available in its original form to researchers at any time, for operations excellence and innovation. Data Lake integration with Business Intelligence & Data Analytics can be complex; Data Lakes serve Machine Learning and Artificial Intelligence tasks best.

- Data Lakehouse combines the best elements of Data Lakes & Data Warehouses. A Data Lakehouse provides a Data Storage architecture for structured, semi-structured, and unstructured data in a single location. It delivers Data Storage services for Business Intelligence, Data Analytics, Machine Learning, and Artificial Intelligence tasks in a single platform.

- Data Mart is a subset of a Data Warehouse usually focused on a particular line of business, department, or subject area. Data Marts make specific data available to a defined group of users, which allows those users to quickly access critical insights without learning and exposing the Enterprise Data Warehouse.

- Data Mesh: The Enterprise Data Platform traditionally hosts data in a centralized location, using Data Stores such as Data Lake, Data Warehouse, and Data Lakehouse managed by a specialized enterprise data team. This monolithic Data Centralization approach slows down adoption and innovation. Data Mesh is a sociotechnical approach to building a distributed data architecture around Business Data Domains, which gives Autonomy to each line of business. Data Mesh enables cloud-native architectures to deliver data services for business agility and innovation at cloud speed. Data Mesh is emerging as a mission-critical data architecture approach for enterprises in the era of Artificial Intelligence. Data Mesh adoption enables the Enterprise Journey to Data Democratization.

- Data Pipeline is a method that ingests raw data from various Enterprise Data Sources into a Data Store such as a Data Lake, Data Warehouse, or Data Lakehouse for analysis. There are two types of Data Pipelines: batch processing and streaming data. The core steps of the Data Pipeline Architecture are Data Ingestion, Data Transformation (sometimes optional), and Data Store.

- Data Fabric is an architecture and set of data services that provide consistent capabilities to standardize data management practices and practicalities across on-premises, cloud, and edge environments.

- Data Ops is a specialized form of Dev Ops / Dev Sec Ops that demands collaboration between Dev Ops teams and data engineers & scientists to improve the communication, integration, and automation of data flows between data managers and data consumers across an enterprise. Data Ops is emerging as a mission-critical methodology for enterprises in the era of business agility.

- Data Security needs no introduction. Enterprise Data Platforms must address the Availability and Recoverability of the Data Services for Authorized Users only. Data Security Architecture establishes and governs the Data Storage, Data Vault, Data Availability, Data Access, Data Masking, Data Archive, Data Recovery, and Data Transport policies that comply with Industry and Enterprise mandates.

- Data Hub architecture enables data sharing by connecting producers with consumers at a central data repository with spokes that radiate to data producers and consumers. Data Hub promotes ease of discovery and integration to consume Data Services.
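The Data Pipeline steps and the Data Deduplication technique described in the list above can be sketched together. This is a minimal illustration under stated assumptions, not a reference implementation: the in-memory `dict` standing in for a Data Store, the `normalize` transform, and the sample records are all hypothetical, and deduplication here only catches exact copies by hashing each record's content.

```python
import hashlib
import json
from typing import Callable, Iterable


def content_key(record: dict) -> str:
    """Hash a record's canonical JSON form so identical records share a key."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def run_batch_pipeline(
    source: Iterable[dict],
    transform: Callable[[dict], dict],
    store: dict,
) -> int:
    """Ingest -> transform -> store; return how many new records were stored."""
    stored = 0
    for raw in source:               # Data Ingestion
        record = transform(raw)      # Data Transformation (sometimes optional)
        key = content_key(record)
        if key in store:             # Data Deduplication: skip exact copies
            continue
        store[key] = record          # Data Store
        stored += 1
    return stored


def normalize(raw: dict) -> dict:
    """Hypothetical transform: trim and lowercase the email field."""
    return {**raw, "email": raw["email"].strip().lower()}


# Hypothetical batch; the first two records become identical once normalized.
data_store: dict = {}
batch = [
    {"id": 1, "email": " Ann@Example.com "},
    {"id": 1, "email": "ann@example.com"},
    {"id": 2, "email": "bob@example.com"},
]
new_records = run_batch_pipeline(batch, normalize, data_store)
print(new_records, len(data_store))
```

Running the same batch again stores nothing new, which is the storage saving deduplication is meant to provide. A streaming pipeline would apply the same three steps per event rather than per batch.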

In simple words, digital transformation is all about making services easy to consume, internally and externally. Everyone is busy planning and executing Digital Transformation in their business context. Digital transformation is a journey, not a destination. The Digital Transformation 3As (Agility, Autonomy, and Alignment) give us a framework to monitor the success of an enterprise's digital transformation journey.

- Agility: The capability of enterprise services to work with insight, flexibility, and confidence in responding to challenging and changing consumer demands.

- Autonomy: Enterprise Architecture that promotes decentralization. The digital transformation journey requires enterprises to provide Autonomy at the first level, so that service owners can make the decisions.

- Alignment: Alignment is not a question of everyone being on the same page; everyone needs to understand the whole page conceptually. The digital transformation journey requires that everyone has visibility into the work happening around them at the seams of their responsibilities.

The digital transformation journey without the 3As is like life without a soul. Continuously establish fit-for-purpose enterprise 3As to monitor innovation economics.

03/30: SixR

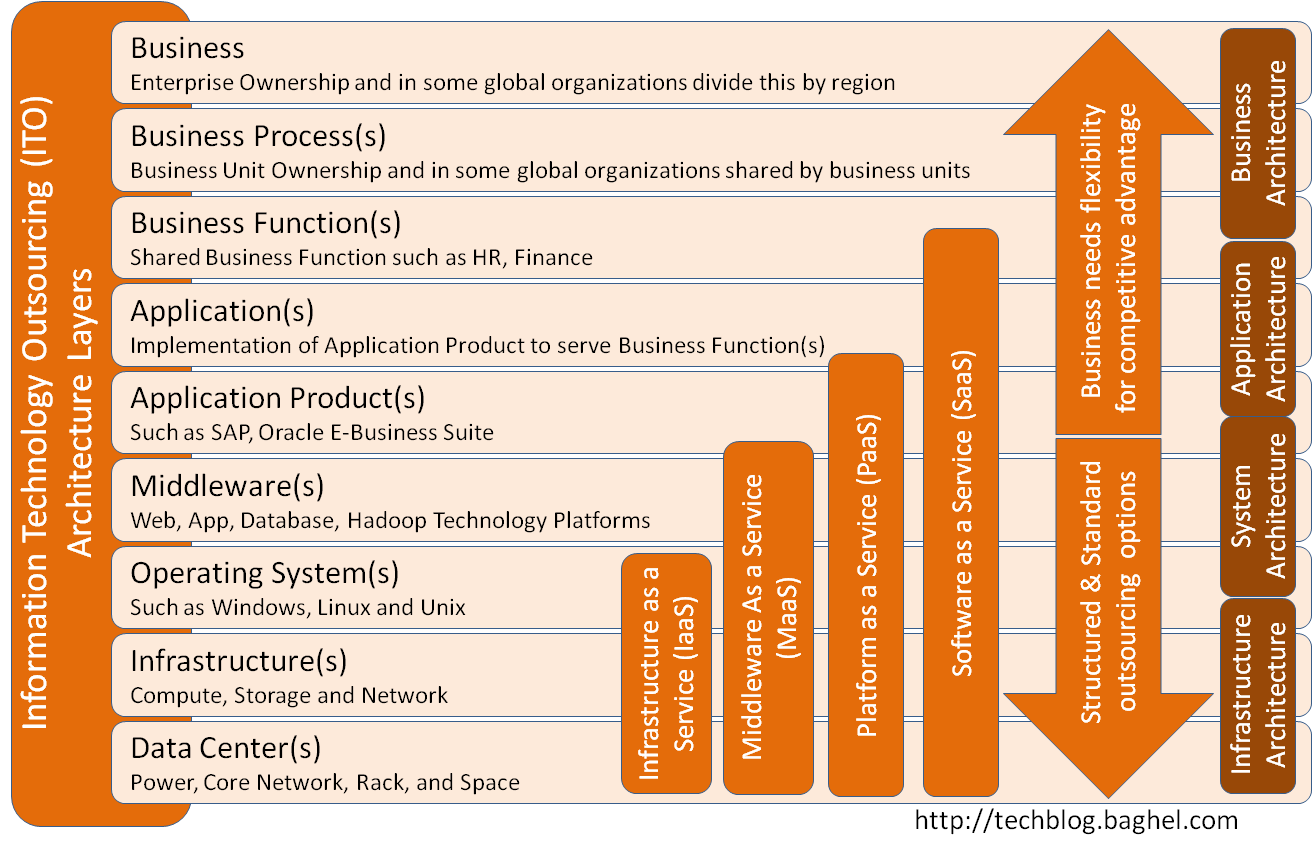

Enterprise Applications Rationalization (EAR) produces an Applications Disposition Plan (ADP) for starting or continuing an enterprise Journey 2 Cloud (J2C). The Applications Disposition Plan assigns each application one of the following SixR dispositions:

- Retire: The business function can be served by another application in the portfolio, or the application is not in use

- Retain: Presently optimized (keep as is), not in scope, or no business value in changing the deployment

- Rehost: Lift-n-Shift or Tech Refresh to an Infrastructure As a Service (IaaS) deployment

- Replace: Replace the business function with a Software As a Service (SaaS) deployment

- Replatform: Replace the systems architecture with a Function As a Service (FaaS), Platform As a Service (PaaS), or Container As a Service (CaaS) deployment

- Rewrite: Rewrite with Cloud Native or Open Source architectures and technologies
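One way to record an Applications Disposition Plan is to model the SixR as an enumeration and group the portfolio by disposition. The sketch below is illustrative only: the target deployment mapping restates the list above, while the `summarize` helper and the application names are hypothetical.

```python
from enum import Enum


class SixR(Enum):
    RETIRE = "Retire"
    RETAIN = "Retain"
    REHOST = "Rehost"
    REPLACE = "Replace"
    REPLATFORM = "Replatform"
    REWRITE = "Rewrite"


# Target deployment models per disposition, restating the list above.
# Retire and Retain imply no new deployment target.
TARGET_DEPLOYMENT = {
    SixR.RETIRE: (),
    SixR.RETAIN: (),
    SixR.REHOST: ("IaaS",),
    SixR.REPLACE: ("SaaS",),
    SixR.REPLATFORM: ("FaaS", "PaaS", "CaaS"),
    SixR.REWRITE: ("Cloud Native", "Open Source"),
}


def summarize(plan: dict) -> dict:
    """Group application names (sorted) by their SixR disposition."""
    grouped = {disposition: [] for disposition in SixR}
    for app, disposition in sorted(plan.items()):
        grouped[disposition].append(app)
    return grouped


# Hypothetical Applications Disposition Plan for a four-application portfolio.
adp = {
    "payroll": SixR.REPLACE,
    "intranet": SixR.REHOST,
    "legacy-fax": SixR.RETIRE,
    "crm": SixR.REPLACE,
}
grouped = summarize(adp)
for disposition, apps in grouped.items():
    if apps:
        print(disposition.value, apps, TARGET_DEPLOYMENT[disposition])
```

How an application earns its disposition is the output of the rationalization exercise itself; the code only records and summarizes the result.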

infrastructure Technology Outsourcing Partner (iTOP) - Partner works with the enterprise infrastructure owners to co-create the standard infrastructure Technology services for the enterprise.

infrastructure Services Innovation Partner (iSIP) - Partner takes a multi-step approach. First, baseline the business roadmap. Second, baseline the roadmap of the applications in alignment with the business roadmap. Then, the partner develops the enterprise infrastructure services catalog in collaboration with business, applications, and infrastructure owners for business agility. The catalog consists of either composite services built from standard infrastructure services or custom-built services, enabling business innovation and agility.

12/25: Technology Stack

The term Technology Stack means different things to different people, depending on their roles and responsibilities. The context also changes its contents: an Application Technology Stack differs from the Server Technology Stack of a server that hosts one or more Application Components. The Application Technology Stack serves Strategy and Design needs, while the Server Technology Stack serves Implementation and Engineering needs. As a result, the Application Technology Stack drives the Server Technology Stack.

An Application Technology Stack may have multiple Server Platforms and Operating Systems, while a Server Technology Stack has only one Server Platform and its associated Operating System. Both Application and Server Technology Stacks can have multiple Middleware Platforms and Application Products.

The Technology Stack schema elements are given below:

- Server Platform such as x86, Mainframe, Mac, Solaris

- Operating System such as Windows, Linux, Solaris

- Middleware - Web, App and Database Platforms

- Application Products

  - Commercial-Off-the-Shelf (COTS)

  - Opensource-off-the-shelf (OOTS)

- Hardware and Software Appliances such as Google Search Appliance
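The schema elements can be modelled as two small data classes. This is a sketch under one assumption that the text does not state: the Application Technology Stack is represented as the collection of the Server Technology Stacks hosting its components. The class and field names are hypothetical, and the sample values are placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class ServerTechnologyStack:
    """One Server Platform and one Operating System per server."""
    server_platform: str
    operating_system: str
    middleware: list = field(default_factory=list)
    application_products: list = field(default_factory=list)


@dataclass
class ApplicationTechnologyStack:
    """Assumed here to aggregate the stacks of the servers hosting the application."""
    servers: list = field(default_factory=list)

    @property
    def server_platforms(self) -> set:
        return {s.server_platform for s in self.servers}

    @property
    def operating_systems(self) -> set:
        return {s.operating_system for s in self.servers}

    @property
    def middleware(self) -> set:
        return {m for s in self.servers for m in s.middleware}


# Hypothetical two-server application: a web tier and a database tier.
web = ServerTechnologyStack("x86", "Linux", middleware=["web"])
db = ServerTechnologyStack("x86", "Windows", middleware=["database"])
app = ApplicationTechnologyStack(servers=[web, db])
print(app.server_platforms, app.operating_systems, app.middleware)
```

Because each server carries single-valued platform and operating system fields, the one-per-server rule is enforced by the type itself, while the application-level view can still report several of each.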

12/25: Applications Metadata

Applications Metadata is the information about an application that provides quick, high-level insight into its underlying business architecture, application architecture, system architecture, and infrastructure architecture. The Applications Metadata of an enterprise portfolio is a key foundation for developing an IT strategy that includes cloud strategy, applications and infrastructure rationalization strategy, data center migration and consolidation strategy, technology refresh strategy, security strategy, and business continuity & disaster recovery strategy for the enterprise.

Sample Schema Elements for Applications Metadata:

- Core Information
  - applicationName
  - description
  - serviceUrls
  - applicationType
  - businessFunction
  - businessCriticality
  - userBase
  - userType
  - securityAndCompliance
  - cloudReadiness
  - roadMap
  - knownIssues
  - comments

- Target SLAs
  - Site
  - System
  - Service

- Implementation Information
  - implementationType
  - technologyStack
  - applicationArchitecture
  - applicationDependencies

- System Maintenance
  - blackoutPeriod
  - maintenanceWindow
  - maintenanceTypes

- Sizing Information
  - userBaseSize
  - transactionVolume
  - transactionRate
  - operatingSystemsInstances
  - webAppServersInstances
  - databaseServersInstances
  - databaseSize
  - filesystemSize

- Software / System Deployment Life Cycle (SDLC)
  - type
  - lifeCyclePath
  - lifeCycleDuration
  - testingType
  - testingDuration
  - state

- Backup and Disaster Recovery
  - performanceObjective
  - RPO
  - RTO
  - deploymentState

- Contacts
  - business
  - development
  - testing
  - productionSupport
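A schema like this is easy to check mechanically when collecting metadata across a portfolio. The sketch below is an illustration rather than a prescribed format: the field names come from the sample schema, but the camelCase section keys, the `missing_fields` helper, and the sample record with its values are assumptions.

```python
# Field names are taken from the sample schema; section keys are hypothetical.
SCHEMA = {
    "coreInformation": [
        "applicationName", "description", "serviceUrls", "applicationType",
        "businessFunction", "businessCriticality", "userBase", "userType",
        "securityAndCompliance", "cloudReadiness", "roadMap", "knownIssues",
        "comments",
    ],
    "targetSLAs": ["Site", "System", "Service"],
    "implementationInformation": [
        "implementationType", "technologyStack", "applicationArchitecture",
        "applicationDependencies",
    ],
    "systemMaintenance": ["blackoutPeriod", "maintenanceWindow", "maintenanceTypes"],
    "sizingInformation": [
        "userBaseSize", "transactionVolume", "transactionRate",
        "operatingSystemsInstances", "webAppServersInstances",
        "databaseServersInstances", "databaseSize", "filesystemSize",
    ],
    "sdlc": [
        "type", "lifeCyclePath", "lifeCycleDuration", "testingType",
        "testingDuration", "state",
    ],
    "backupAndDisasterRecovery": ["performanceObjective", "RPO", "RTO", "deploymentState"],
    "contacts": ["business", "development", "testing", "productionSupport"],
}


def missing_fields(record: dict) -> dict:
    """Return, per section, the schema fields that the record does not provide."""
    gaps = {}
    for section, fields in SCHEMA.items():
        present = record.get(section, {})
        absent = [name for name in fields if name not in present]
        if absent:
            gaps[section] = absent
    return gaps


# Hypothetical, partially completed record for one application.
record = {
    "coreInformation": {"applicationName": "order-portal", "businessCriticality": "high"},
    "backupAndDisasterRecovery": {"RPO": "4h", "RTO": "8h"},
    "contacts": {
        "business": "biz-owner@example.com",
        "development": "dev-lead@example.com",
        "testing": "qa-lead@example.com",
        "productionSupport": "ops@example.com",
    },
}
gaps = missing_fields(record)
print(sorted(gaps))
```

A gap report of this kind shows which applications still lack the metadata needed before a cloud, migration, or disaster recovery strategy can rely on the portfolio data.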

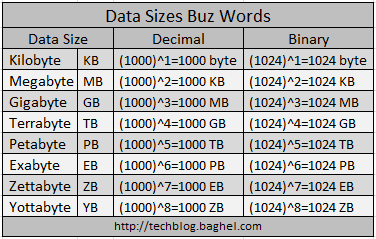

11/30: Data Sizes Buz Words

11/16: ITO Architecture Layers

01/28: What is SPF?

SPF stands for Sender Policy Framework. It provides a validation process that lets email receivers find out whether a Simple Mail Transfer Protocol (SMTP) relay is allowed to relay email for users of a site domain such as aol.com, yahoo.com, or gmail.com.

This helps the receiving mail servers, also known as Mail Exchanger (MX) servers, mark as SPAM the messages sent using someone else's email address. Enterprise email systems often use the SPF framework to prevent delivery of SPAM messages to their employees.

It is one of the industry-leading standards for identifying SPAM. The alternative frameworks are DomainKeys Identified Mail (DKIM) and Sender ID.

SPF implementation depends on the internet’s lifeline, the Domain Name System: the site domain publishes a TXT Resource Record on its authoritative DNS server. Presently, the SPF version 1 specification is in use; it lets a site domain owner publish the authorized SMTP relays.

The SPF mechanism prefixes (qualifiers) are “+” Pass, “-” Fail, “~” SoftFail, and “?” Neutral. Evaluations of a Domain's SPF Records and Intended Actions are given below:

It is highly recommended that you set up SPF, or a similar framework such as Sender ID or DKIM, for your mail domain to allow MX servers to identify email SPAM and to ensure outbound email delivery. It also helps protect your brand image by preventing SPAMMERS from using your mail domain to send emails.
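To make the prefixes concrete, the sketch below splits an SPF version 1 record into its mechanisms and the result each one yields when it matches. It is a simplified illustration: it performs no DNS lookup and does not evaluate a sender's address, the `parse_spf` helper is hypothetical, and the sample record uses reserved documentation addresses and domains. A mechanism written without a prefix defaults to “+” (Pass).

```python
import re

QUALIFIERS = {"+": "Pass", "-": "Fail", "~": "SoftFail", "?": "Neutral"}

# Modifiers look like name=value (for example redirect=...); they carry no qualifier.
MODIFIER = re.compile(r"^[A-Za-z][A-Za-z0-9_.-]*=")


def parse_spf(record: str) -> list:
    """Split an SPF v1 TXT record into (result, mechanism) pairs, in order."""
    terms = record.split()
    if not terms or terms[0].lower() != "v=spf1":
        raise ValueError("not an SPF version 1 record")
    parsed = []
    for term in terms[1:]:
        if MODIFIER.match(term):
            continue  # skip modifiers in this simplified sketch
        if term[0] in QUALIFIERS:
            qualifier, mechanism = term[0], term[1:]
        else:
            qualifier, mechanism = "+", term  # "+" is the default qualifier
        parsed.append((QUALIFIERS[qualifier], mechanism))
    return parsed


# Hypothetical record: one authorized network, one included policy, SoftFail otherwise.
sample = "v=spf1 ip4:192.0.2.0/24 include:_spf.example.com ~all"
for result, mechanism in parse_spf(sample):
    print(f"{mechanism:28} -> {result}")
```

Receivers evaluate the mechanisms from left to right and apply the result of the first one that matches the sending relay, so the trailing `all` acts as the catch-all policy for every other sender.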

05/02: What is TIA-942?

TIA-942 is a Data Center Standards publication developed by the Telecommunications Industry Association (TIA) to set guidelines for planning and building data centers, particularly with regard to cabling systems and network design. The standard deals with both copper and fiber optic media.

Disclaimer

The views expressed in the blog are those of the author and do not necessarily represent the official policy or position of any other agency, organization, employer, or company. Assumptions made in the study are not reflective of the stand of any entity other than the author. Since we are critically-thinking human beings, these views are always subject to change, revision, and rethinking without notice. While reasonable efforts have been made to obtain accurate information, the author makes no warranty, expressed or implied, as to its accuracy.

Reference Architecture to Assess, Design & Build Enterprise Data Platform
