Category: Best Practices

Posted by: bagheljas

The availability and application of data have emerged as the engine of business innovation, today and for the foreseeable future.

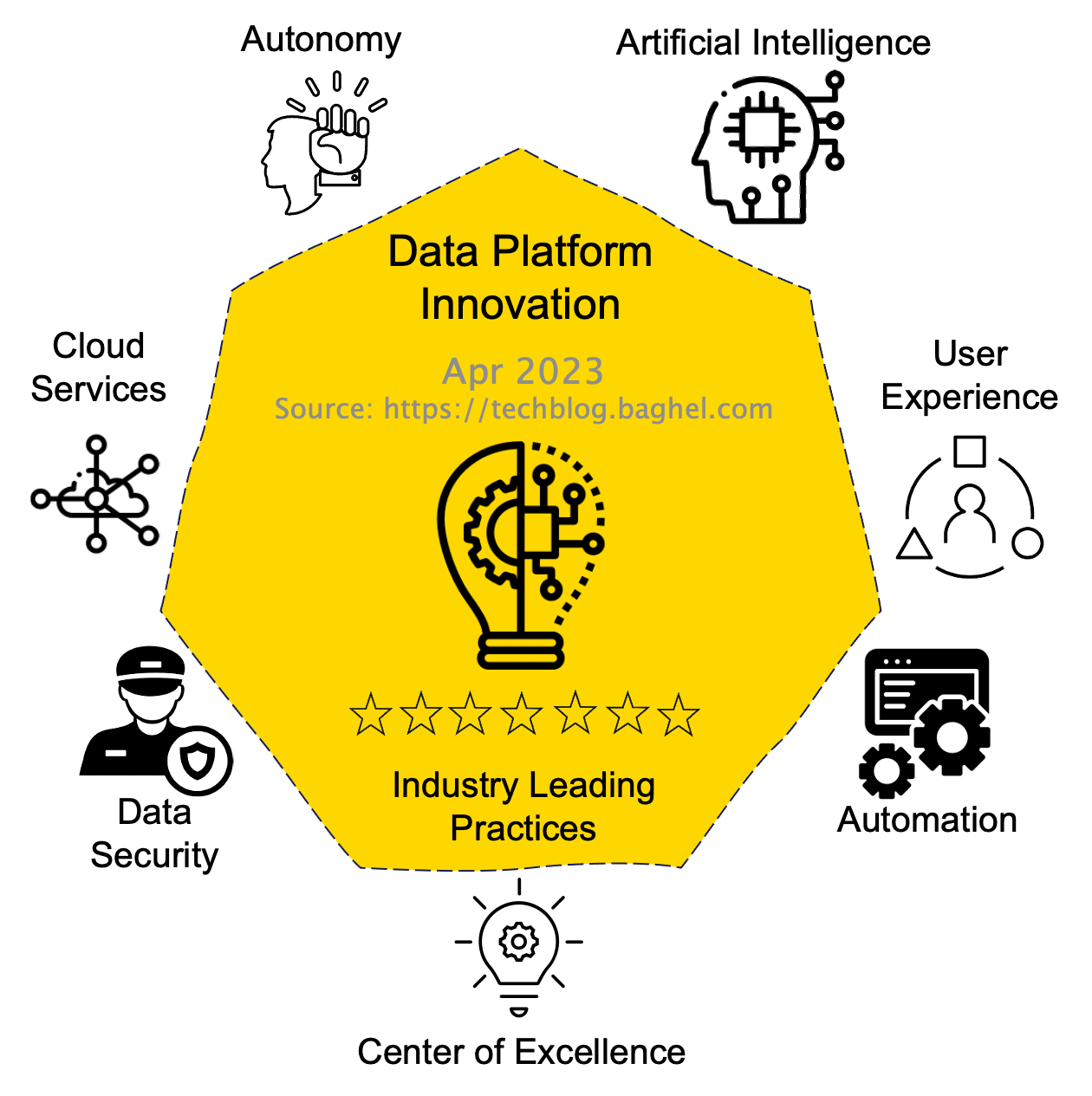

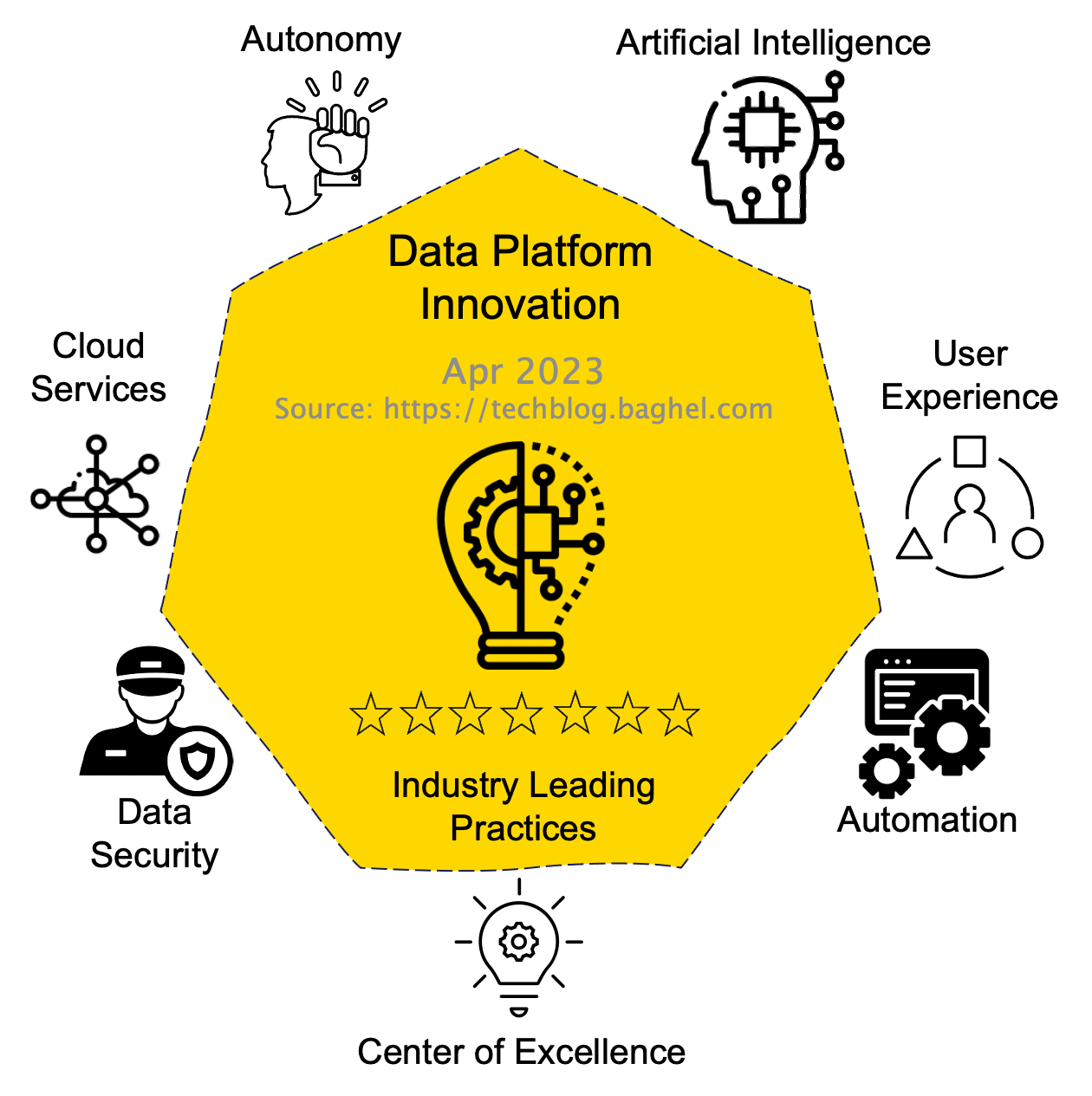

Data Platforms are a conglomerate of Business Requirements, Architectures, Tools & Technologies, Frameworks, and Processes that provide Data Services. Hence, Data Platform Innovation is founded on the people, processes, and technology that manage and use an enterprise Data Platform. In this article, I have organized the emerging Industry Leading Practices into seven Pillars to maximize data value at speed in an enterprise environment.

Pillars - Data Platform Innovation: Industry Leading Practices

- Autonomy
  - Implement Data as a Product with an operating model that establishes a data product owner and team.
  - Support Data Democratization using a Distributed Data Architecture and Data Mesh.
  - Give the data product owner end-to-end ownership of service delivery.

- Artificial Intelligence (AI)
  - Keep a copy of the raw data available to support AI data models yet to be discovered.
  - Use AI tools to identify, correct, and remediate data quality issues.

- User Experience
  - Create and manage data literacy and data-driven culture activities so employees learn and embrace the value of data.
  - Provide data navigation and data research tools for employees.

- Automation
  - Place DataOps at the heart of provisioning, processing, and information management to deliver real-time use cases.
  - Implement automatic backup and restoration of data, and digital twins of the data estate.

- Center of Excellence
  - Shift from a stakeholder buy-in approach to a delivery-partner approach that finds and enables innovation.
  - Create a Data Ecosystem using Data Alliances, Data Sharing Agreements, and a Data Marketplace to develop an Enterprise Data Economy.
  - Publish Common Data Models, Policies, and Processes to ease collaboration within and across organizations.

- Data Security
  - Contribute actively to individual data-protection awareness and rights.
  - Communicate the importance of data security throughout the organization.
  - Develop data privacy, data ethics, and data security as areas of competency, not merely to comply with mandates.

- Cloud Services
  - Adopt a Cloud First mindset to explore innovation quickly and adopt it at speed, with minimal sunk cost, once it becomes mainstream. Let the business model drive the Cloud equilibrium.
  - Enable flexible cloud data model tools that support querying unstructured data.
  - Enable edge devices and high-performance computing at the data sources to deliver real-time use cases.
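The AI pillar calls for tools that identify, correct, and remediate data quality issues. The sketch below is not an AI tool: as a simple, assumed stand-in, it uses the modified z-score (based on the median absolute deviation) to flag missing values and outliers in one numeric column, then corrects them by imputing the median of the clean values. The function names `find_issues` and `remediate` and the 3.5 threshold are hypothetical choices for illustration.

```python
from statistics import median
from typing import Optional


def find_issues(values: list[Optional[float]], threshold: float = 3.5) -> dict[int, str]:
    """Identify missing values and outliers using the modified z-score."""
    present = [v for v in values if v is not None]
    med = median(present)
    # Median absolute deviation: a spread measure that outliers barely move
    mad = median([abs(v - med) for v in present])
    issues: dict[int, str] = {}
    for i, v in enumerate(values):
        if v is None:
            issues[i] = "missing"
        elif mad > 0 and 0.6745 * abs(v - med) / mad > threshold:
            issues[i] = "outlier"
    return issues


def remediate(values: list[Optional[float]], issues: dict[int, str]) -> list[float]:
    """Correct every flagged position with the median of the unflagged values."""
    clean = [v for i, v in enumerate(values) if i not in issues]
    fill = median(clean)
    return [fill if i in issues else v for i, v in enumerate(values)]
```

For example, in `[10.0, 11.0, None, 10.5, 500.0, 9.5]` the `None` is flagged as missing and `500.0` as an outlier, and both are replaced with `10.25`. A production tool would also log each correction so data owners can review the remediation.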

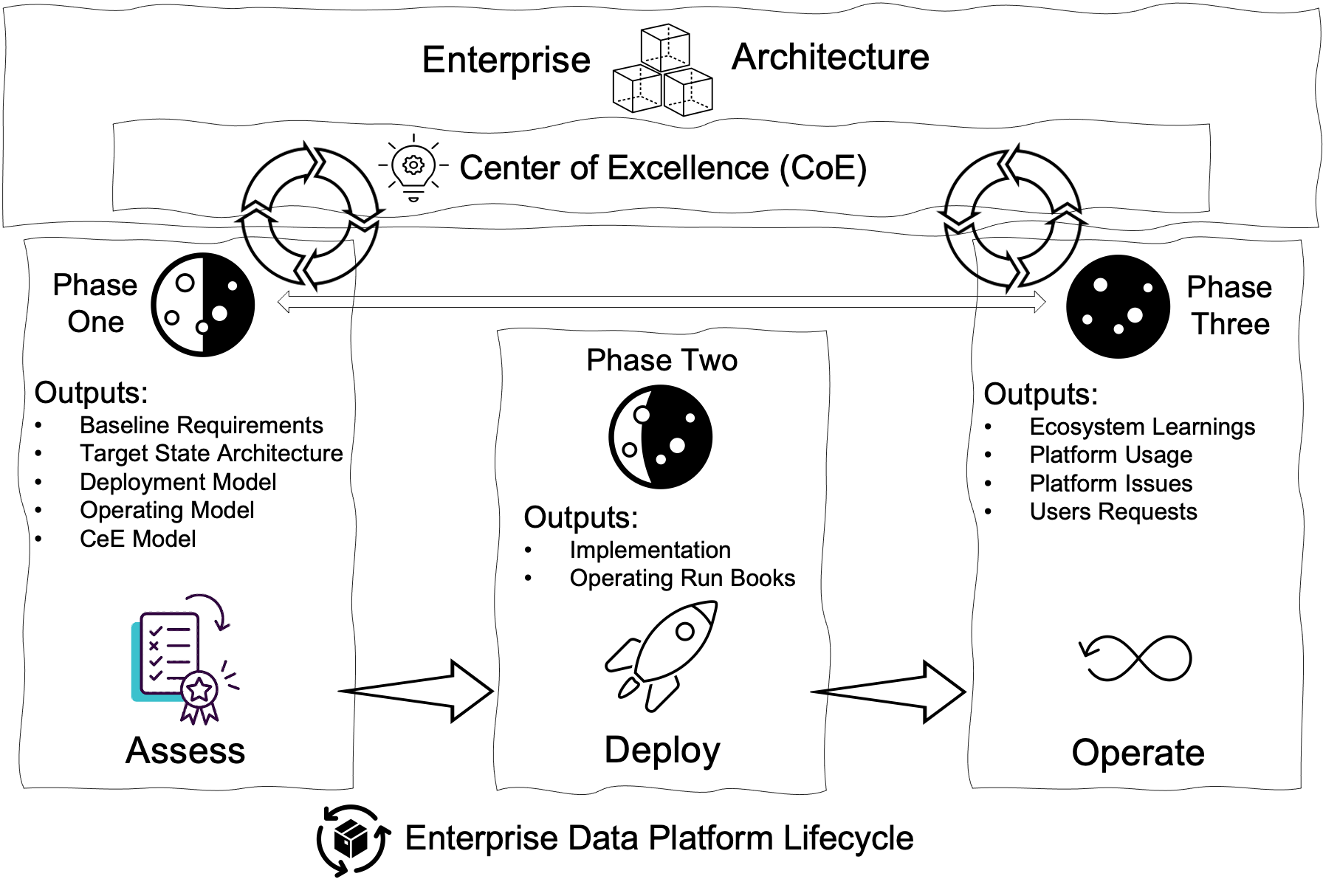

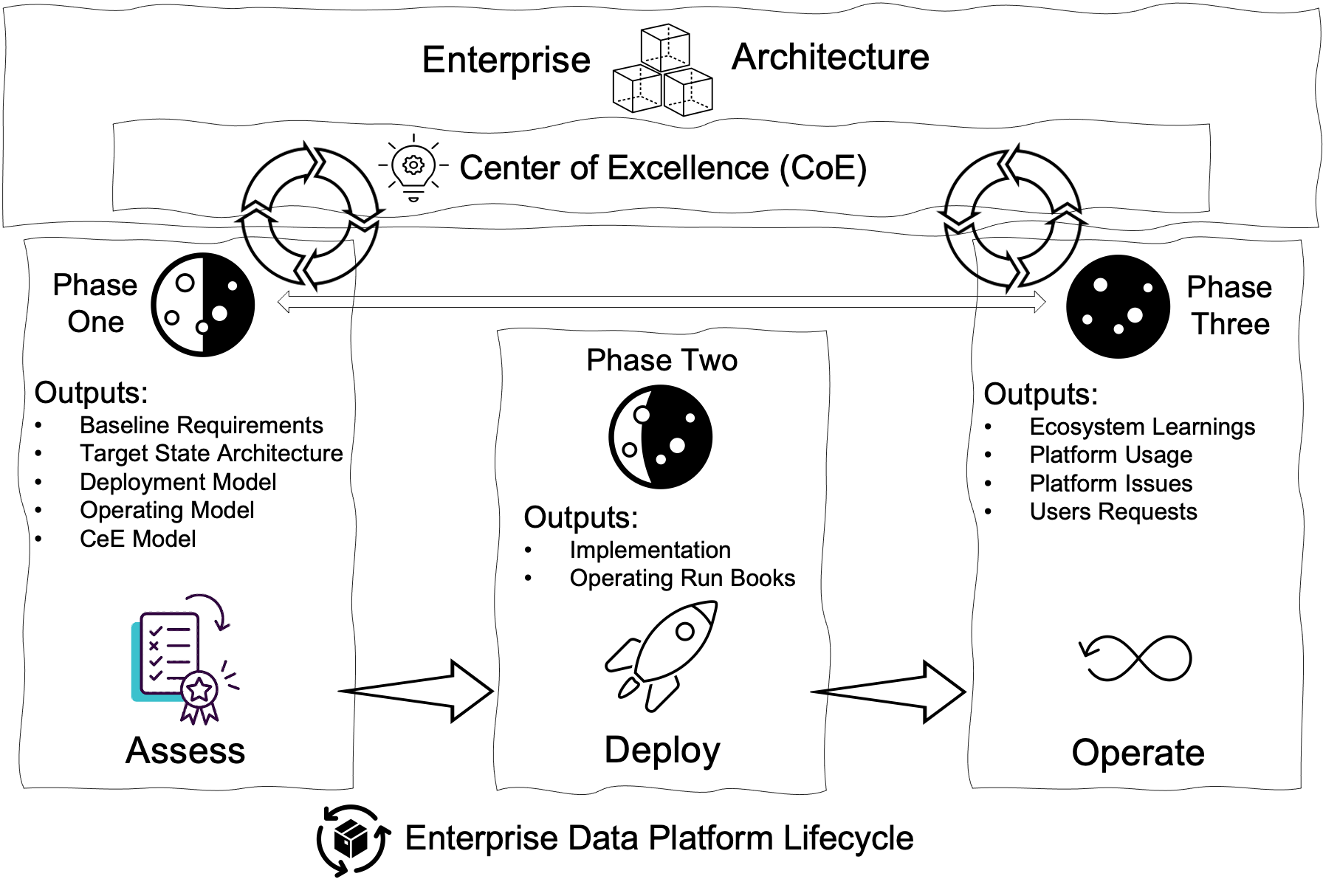

The industry-leading process establishes the People, Process, and Technology (PPT) models for the Deployment Model, Operating Model, and Center of Excellence when an enterprise implements a new Enterprise Data Platform. For an existing Enterprise Data Platform, the process reviews and revises these models instead. The process consists of three phases: Assess, Deploy, and Operate.

I strongly believe that Enterprise Data Platform Modernization is an enterprise journey, not a milestone. Hence, the process feeds inputs from the Operating Model and Center of Excellence back into a review and revision of the PPT models for the Deployment Model, Operating Model, and Center of Excellence of the Enterprise Data Platform. Given the speed and volume of innovation in the data domain, we highly recommend performing this review at least twice per calendar year.
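The Assess, Deploy, and Operate loop can be sketched as a small planning helper. This is a hypothetical illustration, not a prescribed tool: `Phase`, `plan_year`, and the action labels are assumptions, and the only rules encoded are the ones stated above (establish the PPT models for a new platform, review and revise them for an existing one, and run the loop at least twice per calendar year).

```python
from enum import Enum


class Phase(Enum):
    ASSESS = "Assess"
    DEPLOY = "Deploy"
    OPERATE = "Operate"


# The recommendation above: review the PPT models at least twice a calendar year.
MIN_REVIEWS_PER_YEAR = 2


def plan_year(platform_exists: bool,
              reviews_per_year: int = MIN_REVIEWS_PER_YEAR) -> list[tuple[int, Phase, str]]:
    """Return (cycle, phase, action) steps for one calendar year of the loop."""
    if reviews_per_year < MIN_REVIEWS_PER_YEAR:
        raise ValueError(f"plan at least {MIN_REVIEWS_PER_YEAR} review cycles per year")
    steps = []
    for cycle in range(1, reviews_per_year + 1):
        # A new platform establishes its PPT models in the first cycle; an existing
        # platform (and every later cycle) reviews and revises them using feedback
        # from the Operating Model and Center of Excellence.
        first_build = cycle == 1 and not platform_exists
        action = "establish" if first_build else "review and revise"
        for phase in Phase:  # Assess -> Deploy -> Operate, then loop back to Assess
            steps.append((cycle, phase, action))
    return steps
```

Keeping the minimum cadence as a named constant makes it easy to raise the number of cycles when the pace of innovation demands it, while rejecting plans that fall below the recommendation.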

The Industry Leading Approach is to create an Enterprise Data Economy built on Data Collaboration, Data Democratization, and improved User Experiences for Data Research and Artificial Intelligence, with Data Privacy, Security, and Resiliency capabilities built in. The solution design uses the following guiding principles, drawn from the article Data Platform Innovation: Industry Leading Practices.

- Cloud First, while getting the full remaining life out of current IT investments

- Use DataOps to automate Data Services and data Backup & Restore

- Use Distributed Data Architecture and Data Mesh for Data Democratization

- Design for real-time use cases and easy integration of data research tools

- Flexible Data Stores with anytime availability of the Raw Data

- Identify and use AI tools to handle data quality issues

- Use Data Hub, Data Fabric, and Data Navigation for ease of Data Discovery and Collaboration

To assess and design the Data Platform Target State Architecture, use the Reference Architecture from the article Data Platform Buzzwords: Introduction and So What?

Shortly after earning a Master of Science in Operations Research and Statistics at the Indian Institute of Technology Bombay (IIT Bombay), I joined the Artificial Intelligence Lab of the Computer Science Department as a Research Scientist. I developed an AI application for crew routing and scheduling that improved operational efficiency by 36% for our customers and won numerous awards and national recognition.

Later, after moving to the United States, I worked as a Software Engineer with companies such as AT&T and IBM. One key highlight was developing a single sign-on security architecture for over 200 mission-critical apps at AT&T. This was followed by a stint at the National Library of Medicine (NLM), where I delivered data integration between NLM and the FDA, a search engine, and conversational AI for self-service.

Next, I worked at Aol as an Operations Architect, a key role in enabling billing and subscription services and setting up enterprise standards and best practices. While at Aol, I earned a Master of Science in Technology Management at George Mason University and a Chief Information Officer Certificate at United States Federal CIO University.

Over the last ten years, I have led consulting, solution center, and pre-sales at SRA International / General Dynamics Information Technology, CenturyLink / Savvis / Lumen, and IBM / Kyndryl. Notable accomplishments in the apps and data space:

- SRA International / General Dynamics Information Technology: Executed the modernization of the Centers for Disease Control and Prevention's Vaccine Adverse Event Reporting System (the largest program of its kind in the world), enabling self-service and green initiatives that deliver digital reporting and remote work options.

- IBM / Kyndryl: Onboarded a large new logo client in the insurance industry to adopt Hybrid Cloud, DevOps, and API-driven managed services to deliver on-demand one-click Guidewire Apps environments, reducing the environment delivery timeline from 45 days to 8 hours.

- Kyndryl: Developed an advanced Banking Payments Ecosystem Transaction Logs Data Mining Tool using Python. This efficient tool enables platform engineering and operations teams to accurately predict transaction performance while providing valuable recommendations for optimizing the ecosystem.

I have co-founded:

Learn more about me:

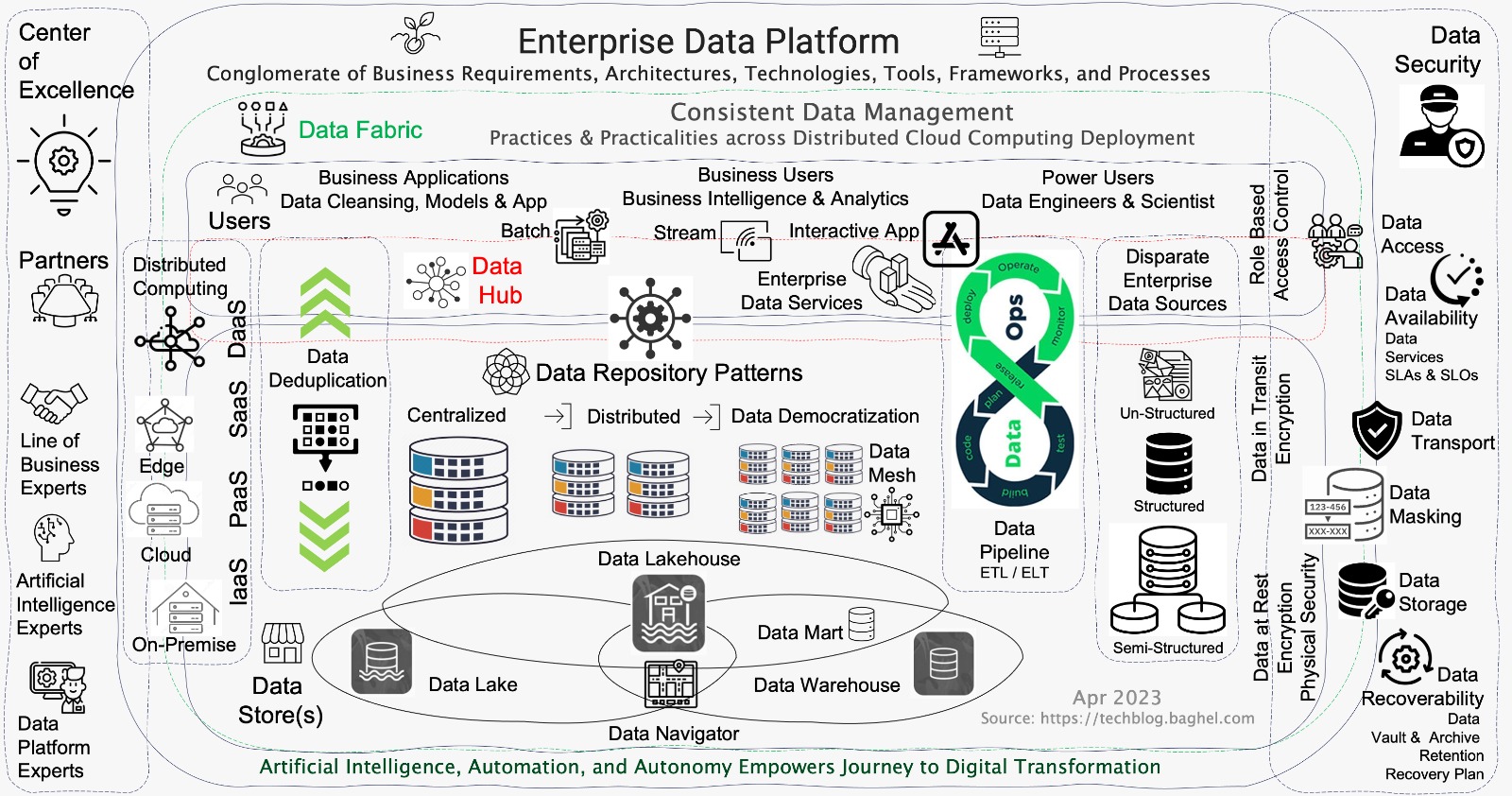

Modern Enterprise Data Platforms are a conglomerate of Business Requirements, Architectures, Tools & Technologies, Frameworks, and Processes to provide Data Services.

Data Platforms are mission-critical to an enterprise, irrespective of size and industry sector. Data Platform is the foundation for Business Intelligence & Artificial Intelligence to deliver sustainable competitive advantage for business operations excellence and innovation.

- Data Store is a repository for persistently storing and managing collections of structured & unstructured data, files, and emails. Data Warehouse, Data Lake, and Data Lakehouse (with a Data Navigator) are the specialized Data Store implementations used to deliver Modern Enterprise Data Platforms. Data Deduplication is a technique for eliminating duplicate copies of repeating data. A successful Data Deduplication implementation optimizes the storage & network capacity needs of Data Stores, which is critical to managing the cost and performance of Modern Enterprise Data Platforms.

- Data Warehouse enables the categorization and integration of enterprise structured data from heterogeneous sources for future use. Operational schemas are prebuilt for each relevant business requirement, typically following the ETL (Extract-Transform-Load) process in a Data Pipeline. Updating Enterprise Data Warehouse operational schemas can be challenging and expensive. Data Warehouses serve Data Analytics & Business Intelligence best but are limited to the particular problems their schemas were designed to solve.

- Data Lake is an extensive centralized data store that hosts a collection of raw data, including semi-structured & unstructured data. Data Lakes enable comprehensive analysis of big and small data from a single location. Data is extracted and loaded first, then transformed (ELT) when it is needed for analysis. A Data Lake makes historical data available to researchers in its original form at any time, for operations excellence and innovation. Integrating Data Lakes with Business Intelligence & Data Analytics can be complex; Data Lakes are best suited for Machine Learning and Artificial Intelligence tasks.

- Data Lakehouse combines the best elements of Data Lakes & Data Warehouses. It provides a Data Storage architecture for structured, semi-structured, and unstructured data in a single location, and delivers Data Storage services for Business Intelligence, Data Analytics, Machine Learning, and Artificial Intelligence tasks in a single platform.

- Data Mart is a subset of a Data Warehouse, usually focused on a particular line of business, department, or subject area. Data Marts make specific data available to a defined group of users, allowing them to access critical insights quickly without having to learn, or be given access to, the entire Enterprise Data Warehouse.

- Data Mesh: Traditionally, an Enterprise Data Platform hosts data in a centralized location using Data Stores such as a Data Lake, Data Warehouse, or Data Lakehouse, managed by a specialized enterprise data team. This monolithic, centralized approach slows adoption and innovation. Data Mesh is a sociotechnical approach to building a distributed data architecture around business data domains, giving lines of business autonomy. Data Mesh enables cloud-native architectures to deliver data services for business agility and innovation at cloud speed. Data Mesh is emerging as a mission-critical data architecture approach for enterprises in the era of Artificial Intelligence, and its adoption advances the Enterprise Journey to Data Democratization.

- Data Pipeline is a method for ingesting raw data from various Enterprise Data Sources into a Data Store, such as a Data Lake, Data Warehouse, or Data Lakehouse, for analysis. There are two types of Data Pipelines: batch processing and streaming. The core steps of a Data Pipeline Architecture are Data Ingestion, Data Transformation (sometimes optional), and Data Store.

- Data Fabric is an architecture and set of data services that provide consistent capabilities and standardize data management practices and practicalities across on-premises, cloud, and edge environments.

- Data Ops is a specialized form of DevOps / DevSecOps in which DevOps teams collaborate with data engineers & scientists to improve the communication, integration, and automation of data flows between data managers and data consumers across an enterprise. Data Ops is emerging as a mission-critical methodology for enterprises in the era of business agility.

- Data Security needs no introduction. Enterprise Data Platforms must ensure the Availability and Recoverability of Data Services for Authorized Users only. Data Security Architecture establishes and governs the Data Storage, Data Vault, Data Availability, Data Access, Data Masking, Data Archive, Data Recovery, and Data Transport policies that comply with industry and enterprise mandates.

- Data Hub architecture enables data sharing by connecting producers with consumers through a central data repository, with spokes that radiate to data producers and consumers. A Data Hub makes Data Services easy to discover, integrate, and consume.
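The Data Deduplication technique from the Data Store entry can be illustrated with a minimal, in-memory sketch, assuming whole-object (file-level) deduplication keyed by a SHA-256 content hash. `DedupStore` is a hypothetical class; production Data Stores commonly deduplicate at block or chunk level and persist the index, which this sketch does not.

```python
import hashlib


class DedupStore:
    """Toy whole-object deduplicating store keyed by SHA-256 content hash."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}  # content digest -> payload, stored once
        self.refs: dict[str, str] = {}     # logical name -> content digest

    def put(self, name: str, payload: bytes) -> str:
        digest = hashlib.sha256(payload).hexdigest()
        # Keep the bytes only the first time this content is seen;
        # later duplicates just add a reference to the existing copy.
        self.blobs.setdefault(digest, payload)
        self.refs[name] = digest
        return digest

    def get(self, name: str) -> bytes:
        return self.blobs[self.refs[name]]

    def stored_bytes(self) -> int:
        """Physical capacity actually used."""
        return sum(len(b) for b in self.blobs.values())

    def logical_bytes(self) -> int:
        """Capacity that would be used without deduplication."""
        return sum(len(self.blobs[d]) for d in self.refs.values())
```

For example, storing two identical 16-byte emails (`b"quarterly report"`) and one 13-byte email (`b"board minutes"`) gives 45 logical bytes but only 29 stored bytes; the saved capacity is what reduces storage and network cost.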
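The ETL and ELT orderings from the Data Warehouse, Data Lake, and Data Pipeline entries differ only in when the Data Transformation step runs. The sketch below assumes in-memory batch processing, with Python lists standing in for the warehouse and the lake; `ingest`, `etl`, `elt`, `analyze`, and the `to_sales_schema` transform are hypothetical names. A streaming pipeline would apply the same steps to each record as it arrives rather than to a whole batch.

```python
from typing import Callable, Iterable

Record = dict[str, object]
Transform = Callable[[Record], Record]


def ingest(sources: Iterable[Iterable[Record]]) -> list[Record]:
    """Data Ingestion (batch): copy every record from every source."""
    return [dict(rec) for source in sources for rec in source]


def etl(sources: Iterable[Iterable[Record]], transform: Transform,
        warehouse: list[Record]) -> None:
    """Extract -> Transform -> Load: only schema-shaped rows reach the warehouse."""
    warehouse.extend(transform(rec) for rec in ingest(sources))


def elt(sources: Iterable[Iterable[Record]], lake: list[Record]) -> None:
    """Extract -> Load: raw records land in the lake in their original form."""
    lake.extend(ingest(sources))


def analyze(lake: list[Record], transform: Transform) -> list[Record]:
    """...then Transform, applied only when the data is needed for analysis."""
    return [transform(rec) for rec in lake]


def to_sales_schema(rec: Record) -> Record:
    # Hypothetical prebuilt schema: keep id and amount, cast amount to float
    return {"id": rec["id"], "amount": float(rec["amount"])}
```

With the same two sources, the warehouse holds only the schema-shaped rows, while the lake keeps every raw field (such as a free-text note) and yields the same rows once `analyze` applies the transform. That retained raw data is what lets researchers ask questions the prebuilt schema did not anticipate.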
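Data Masking, one of the policies a Data Security Architecture governs, can be sketched as a field-level policy applied whenever the reader is not authorized. The policy table, the masking rules (keep the first character of an email's local part, keep the last four characters of an account number), and `read_record` are all assumptions for illustration, not a standard.

```python
import re
from typing import Callable

# Capture the first character of the local part and the whole "@domain".
_EMAIL = re.compile(r"([A-Za-z0-9._%+-])[A-Za-z0-9._%+-]*(@[A-Za-z0-9.-]+)")


def mask_email(value: str) -> str:
    return _EMAIL.sub(r"\1***\2", value)


def mask_account(value: str, keep: int = 4) -> str:
    """Show only the last `keep` characters; fully mask short values."""
    if len(value) <= keep:
        return "*" * len(value)
    return "*" * (len(value) - keep) + value[-keep:]


# Hypothetical masking policy: field name -> masking function
MASKING_POLICY: dict[str, Callable[[str], str]] = {
    "email": mask_email,
    "account": mask_account,
}


def read_record(record: dict[str, str], authorized: bool) -> dict[str, str]:
    """Return clear values to authorized users and masked values to everyone else."""
    if authorized:
        return dict(record)
    masked = {}
    for field, value in record.items():
        mask = MASKING_POLICY.get(field)
        masked[field] = mask(value) if mask else value
    return masked
```

For an unauthorized reader, `jane.doe@example.com` becomes `j***@example.com` and `1234567890` becomes `******7890`, while fields outside the policy pass through unchanged.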

In simple words, digital transformation is all about enabling services that are easy to consume, internally and externally. Everyone is busy planning and executing Digital Transformation in their own business context. Digital transformation is a journey, not a destination. The Digital Transformation 3As (Agility, Autonomy, and Alignment) give us a framework to monitor the success of an enterprise's digital transformation journey.

- Agility: The capability of enterprise services to work with insight, flexibility, and confidence in responding to challenging and changing consumer demands.

- Autonomy: An Enterprise Architecture that promotes decentralization. The digital transformation journey requires that enterprises give service owners first-level autonomy to make decisions.

- Alignment: Alignment is not a question of everyone being on the same page; everyone needs to understand the whole page conceptually. The digital transformation journey requires that everyone have visibility into the work happening around them, at the seams of their responsibilities.

A digital transformation journey without the 3As is like life without a soul. Continuously establish fit-for-purpose enterprise 3As to monitor innovation economics.

05/09: Resume

JASWANT SINGH

Email: [email protected]

Text / Voice / WhatsApp: 703.662.1097

US Citizen Security Clearance: POT 6C

Reston, Virginia

APPS, DATA, CLOUD, and INTEGRATION TECHNOLOGY EXECUTIVE

My Blog: https://techblog.baghel.com

LinkedIn: https://www.linkedin.com/in/jaswantsingh

EXECUTIVE SUMMARY

Experienced Apps, Data, Cloud, and Integration Executive with over 25 years of driving digital transformation for Fortune 500 companies, generating over $960 million in global revenue through Azure, AWS, GCP, OCI, IBM Cloud, VMware, and Red Hat from ideation to launch.

KEY ACCOMPLISHMENTS

- Cloud Computing Evangelist: Delivered keynote on SaaS best practices at SaaSCon, Santa Clara, California, and developed a Cloud Adoption Strategy framework for hybrid IT, accelerating enterprise modernizations.

- Data, AI & ML Platform Leader: Headed Big Data practice at CenturyLink, delivering strategy, implementation, and managed services that drove growth for financial services and omnichannel retail clients.

- Site Reliability & DevSecOps: Deployed enterprise SOA and led DevSecOps for billing and subscription applications at Aol, improving agility and operational efficiency.

CORE COMPETENCIES

- Leadership: Global team management, coaching/mentoring, enterprise architecture, P&L oversight, financial engineering, analytical problem-solving.

- Data & Cloud: Data ecosystems (democratization, architectures: Warehouse / Lake / Lakehouse / DataMart / Mesh; security, hubs, fabrics); Multi-cloud mastery (Azure, AWS, GCP, OCI, IBM Cloud, VMware, OpenShift) across SaaS / PaaS / CaaS / IaaS / Serverless / Cloud-Native.

- Apps & Automation: App / software / system / infra implementation / rationalization / modernization / migration; CI/CD pipelines, DevSecOps, service / resource automation, IaC.

PROFESSIONAL EXPERIENCE

Kyndryl / IBM, Dulles, Virginia

JUNE 2018 - PRESENT

Director - Innovation and Integration, Global Cloud Practice; Client Technical Leader, US Financial Services; Chief Enterprise Architect, US CTO Office

- Provided C-level advisory and thought leadership for Digital Transformation and IT Outsourcing, co-creating enterprise solutions that generated over $360M in revenue.

- Developed framework for assessing application portfolios to create cloud disposition plans, roadmaps, and business opportunities.

- Designed and delivered industry-first cloud-native solutions for banking branch technology using NG One, Splunk, and Azure Cloud, enabling centralized management of banking branch peripherals for deployment and operations.

- Led the largest U.S. Guidewire Hybrid Cloud and DevSecOps implementation, delivering one-click API-driven environments that cut delivery timelines from 45 days to 8 hours.

- Developed a Python-based Banking Payments Ecosystem Transaction Logs Data Mining Tool, enabling platform engineering and operations teams to provide optimization recommendations and predict transaction performance.

Lumen Technologies / CenturyLink, Dulles, Virginia

OCTOBER 2011 - MAY 2018

Senior Director - Global Advanced Services Solution Center; Principal Enterprise Architect, Consulting and Implementation Services

- Directed cross-organization matrix teams for multimillion-dollar solutions in Applications and Infrastructure Rationalization, Modernization and Migration, Data Center Migration and Consolidation, and Business Resiliency. Created Cloud Readiness Assessment and Big Data Practice, driving Digital Transformation opportunities.

- Led strategic deal pursuits and onboarded new clients with a 100% success rate; built and managed a high-performing team of solutions architects, generating over $600M in revenue through global enterprise digital transformations.

Previous Professional Experience

JULY 1995 - OCTOBER 2011

Pioneered cloud and SOA architectures, operationalized billing systems, architected federal health applications, co-founded technology ventures, and advanced AI research for enterprise excellence.

- Chief Architect - Cloud and Service-Oriented Architecture (SOA) Center of Excellence, SRA International / General Dynamics Information Technology Inc.

- Operations Architect - Billing and Subscription, and Web and Java Center of Excellence, America Online (AOL Inc.).

- Software Architect - Applications Branch, Office of Computer and Communications Systems (OCCS), US National Library of Medicine / Lockheed Martin.

- Co-Founder and Chief Technology Officer, StrategiesTool, Suchna! / Baghel Corporation.

- Senior Software Engineer - Interactive Advantage, AT&T / IBM Global Services.

- Research Scientist and Founding Member, Mumbai Educational Trust's Institute of Software Development and Research.

- Research Scientist - Management of Informatics and Technology for Enterprise Excellence (MINT-EX), Artificial Intelligence Research Lab, Indian Institute of Technology Bombay.

PUBLICATIONS

- Cloud Migration & Modernization Solution Design Options, and Industry Leading Practices, Microsoft Azure Architect Technologies & Design, Presented at IBM Your Learning

- Getting Started with Oracle SOA BPEL Process Manager, Packt Publishing, ISBN 978-1-84968-898-7

- Best Practices for Developing & Deploying SaaS, White Paper, Presented at SaaSCon, Santa Clara, California

- Web Application Security Implementation and Industry Best Practices Handbook, Presented at SRA University

EDUCATION

- United States Federal CIO University: CIO University Certificate, Federal Executive Competencies

- Costello College of Business, George Mason University, Virginia: Master of Science in Technology Management

- Department of Mathematics, Indian Institute of Technology Bombay: Master of Science in Mathematics, Specialization in Operations Research and Statistics

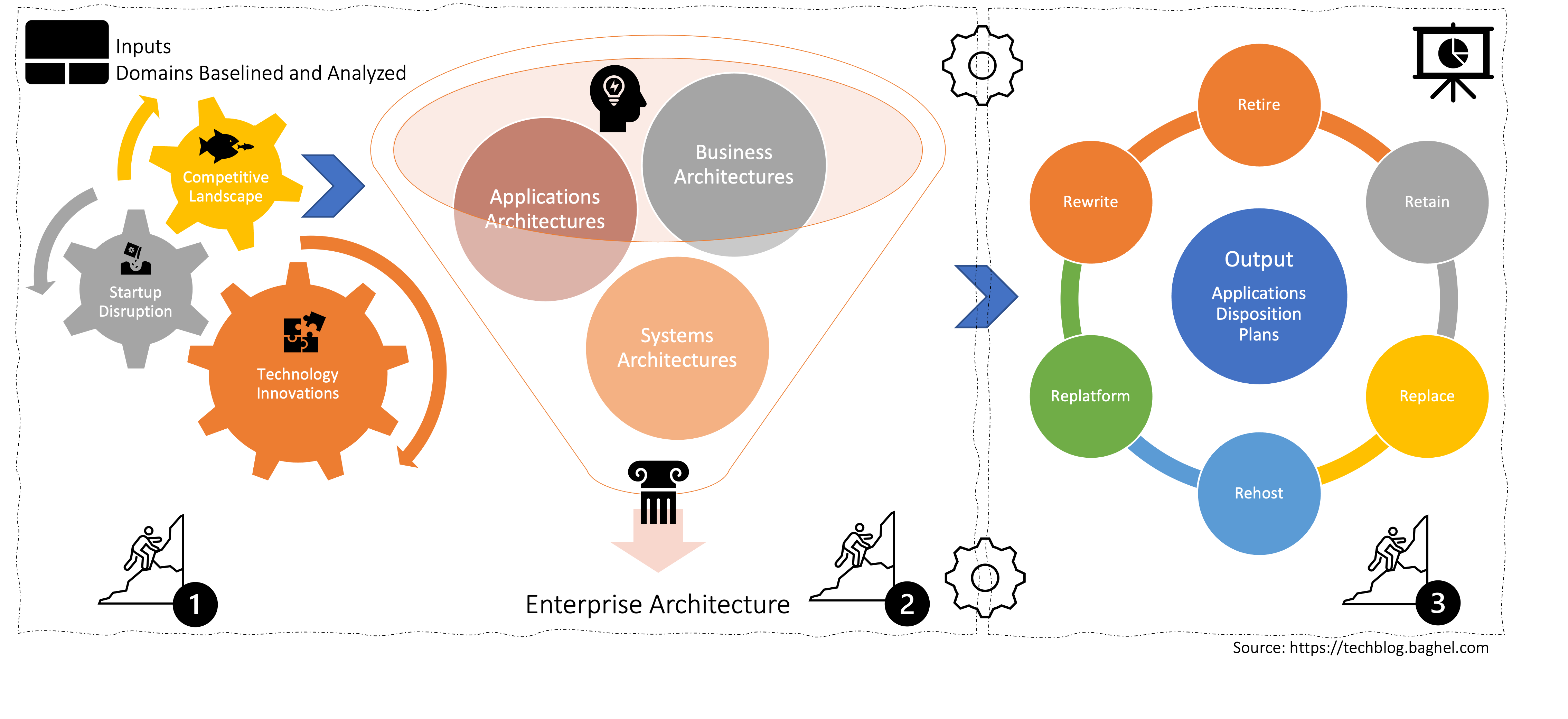

Introduction

Enterprise Applications Rationalization is a process for creating a business-driven application system architecture. It demands continuous input and collaboration across business, technology, and operations. We often find that enterprises lack support from business owners for Enterprise Applications Rationalization. An Applications Disposition Strategy based only on application architectures and runtimes is a recipe for disaster down the line.

So What?

Enterprise Applications Rationalization is a mission-critical journey for any enterprise. Neglecting it can, in time, put an enterprise in the same boat as Aol and Yahoo. The III-Phase approach keeps an enterprise relevant in the marketplace through end-to-end alignment from the business model to the application runtime.

Phase-I:

Identify initiatives to adopt technology innovation, prepare for startup disruption, and improve the enterprise's competitive position.

Phase-II:

Build roadmaps for Business, Applications, Systems, and Enterprise Architecture to deliver the Phase-I initiatives and solutions to the issues identified.

Phase-III:

Map the Enterprise Applications Portfolio into Retire, Retain, Replace, Rehost, Replatform, and Rewrite (SixR), in alignment with the objectives established in the previous phases.

03/30: SixR

Enterprise Applications Rationalization (EAR) produces an Applications Disposition Plan (ADP) for starting or continuing an enterprise Journey 2 Cloud (J2C). The Applications Disposition Plan assigns each application one of the following SixR dispositions:

- Retire: The business function can be served by another application in the portfolio, or the application is not in use.

- Retain: The application is presently optimized and kept as is, is not in scope, or there is no business value in changing its deployment.

- Rehost: Lift-n-Shift or Tech Refresh to an Infrastructure as a Service (IaaS) deployment.

- Replace: Replace the business function with a Software as a Service (SaaS) deployment.

- Replatform: Replace the systems architecture with a Function as a Service (FaaS), Platform as a Service (PaaS), or Container as a Service (CaaS) deployment.

- Rewrite: Rewrite using Cloud Native or Open Source architectures and technologies.
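The SixR definitions above can be encoded as a simple rule-based disposition helper. The assessment attributes on `App` and the order in which the rules are checked (Retire first, Rehost as the fallback) are hypothetical assumptions; in practice the disposition should come out of the business-aligned phases of Enterprise Applications Rationalization, not from application attributes alone.

```python
from dataclasses import dataclass
from enum import Enum


class SixR(Enum):
    RETIRE = "Retire"
    RETAIN = "Retain"
    REHOST = "Rehost"
    REPLACE = "Replace"
    REPLATFORM = "Replatform"
    REWRITE = "Rewrite"


@dataclass
class App:
    # Hypothetical assessment attributes gathered during rationalization
    name: str
    in_use: bool = True
    function_served_elsewhere: bool = False
    already_optimized: bool = False
    in_scope: bool = True
    change_adds_value: bool = True
    saas_available: bool = False
    needs_cloud_native_rewrite: bool = False
    fits_faas_paas_caas: bool = False


def disposition(app: App) -> SixR:
    """Apply the SixR definitions in a fixed (assumed) order of precedence."""
    if not app.in_use or app.function_served_elsewhere:
        return SixR.RETIRE
    if app.already_optimized or not app.in_scope or not app.change_adds_value:
        return SixR.RETAIN
    if app.saas_available:
        return SixR.REPLACE  # business function moves to SaaS
    if app.needs_cloud_native_rewrite:
        return SixR.REWRITE
    if app.fits_faas_paas_caas:
        return SixR.REPLATFORM
    return SixR.REHOST  # default: lift-n-shift or tech refresh to IaaS
```

Checking Retire and Retain first means no migration effort is planned for applications that should be decommissioned or left alone; the remaining order is a judgment call each enterprise should set from its Phase-I and Phase-II objectives.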

03/05: Digital BizCard

Disclaimer

The views expressed in this blog are those of the author and do not necessarily represent the official policy or position of any other agency, organization, employer, or company. Assumptions made in the study do not reflect the stance of any entity other than the author. Since we are critically thinking human beings, these views are always subject to change, revision, and rethinking without notice. While reasonable efforts have been made to obtain accurate information, the author makes no warranty, expressed or implied, as to its accuracy.
